Explore the benefits and challenges of event-driven process orchestration from a practitioner’s viewpoint. Learn how to optimize workflows in dynamic environments.

Introduction

Event-driven process orchestration is an essential strategy for businesses aiming to manage complex workflows efficiently. In today’s fast-paced and dynamic environments, organizations require systems that can respond and adapt in real-time to fluctuating conditions. As a practitioner, understanding the core principles of this approach and how to implement it effectively can make all the difference in achieving operational success. This post delves into the key concepts, benefits, challenges, and strategies of event-driven process orchestration, offering practical insights for organizations considering this approach.

What is Event-Driven Process Orchestration?

Event-driven process orchestration refers to the method of coordinating workflows and business processes based on events that occur within a system. These events can originate from multiple sources, such as user interactions, external systems, or IoT devices. This model contrasts with traditional approaches, such as synchronous request-response calls between services, scheduled batch jobs, or manual interventions, in which work starts only when a caller asks for it or a timer fires. By reacting to events as they are detected, organizations can start processes promptly, maintaining the responsiveness needed in today’s competitive landscape.

Key Concepts in Event-Driven Process Orchestration

To understand event-driven process orchestration fully, it is crucial to explore the underlying concepts that make it effective. First, event detection plays a central role. Organizations must monitor various touchpoints within their systems, including sensors, web applications, APIs, and user interfaces, for events that indicate a change in state or require action. These events can range from a customer placing an order, to a sensor detecting a change in temperature, to a system alert indicating an error. Once an event is detected, the system responds by executing specific actions, which are part of a larger workflow.
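As a minimal sketch of the detection step, the Python snippet below models an event as a small record and routes it to the action registered for its type. The names `Event`, `ACTIONS`, and `handle`, as well as the event type strings, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class Event:
    """A detected change in state. Field names are illustrative."""
    type: str                      # e.g. "order.placed"
    source: str                    # e.g. "web-app" or "sensor-17"
    payload: Dict[str, Any]
    occurred_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def on_order_placed(event: Event) -> str:
    return f"start fulfilment for order {event.payload['order_id']}"


def on_temperature_changed(event: Event) -> str:
    return f"check cooling, reading {event.payload['celsius']} C"


# Map each event type to the action that starts its workflow.
ACTIONS: Dict[str, Callable[[Event], str]] = {
    "order.placed": on_order_placed,
    "temperature.changed": on_temperature_changed,
}


def handle(event: Event) -> str:
    """Run the action registered for the event type, or ignore the event."""
    action = ACTIONS.get(event.type)
    if action is None:
        return f"ignored {event.type}"
    return action(event)
```

Calling `handle(Event("order.placed", "web-app", {"order_id": 42}))` runs the order action, while an event type with no registered action is ignored rather than raising an error.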

The second key concept is process orchestration. Once an event is detected, the system must manage the sequence of tasks and activities necessary to complete the business process. This may involve coordinating multiple services or systems in a particular order, ensuring that dependencies between tasks are respected. The orchestration engine handles the flow of work, ensuring that the process adheres to business rules and goals, while also managing exceptions and errors that may arise.
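The sketch below illustrates, under simplifying assumptions, what respecting dependencies and managing errors can look like. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to order tasks; the function `run_process` and the task names in the usage note are hypothetical, and every dependency is assumed to be a key in `tasks`.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_process(
    tasks: Dict[str, Callable[[], None]],
    depends_on: Dict[str, Set[str]],
) -> List[str]:
    """Run each task after the tasks it depends on and return a log.

    A task is skipped when any task it depends on failed or was skipped.
    """
    log: List[str] = []
    failed: Set[str] = set()
    # TopologicalSorter expects a mapping of node -> predecessors.
    graph = {name: depends_on.get(name, set()) for name in tasks}
    for name in TopologicalSorter(graph).static_order():
        if depends_on.get(name, set()) & failed:
            failed.add(name)
            log.append(f"{name}: skipped")
            continue
        try:
            tasks[name]()
            log.append(f"{name}: ok")
        except Exception as exc:  # record the error instead of crashing
            failed.add(name)
            log.append(f"{name}: failed ({exc})")
    return log
```

For a chain such as `check_inventory`, then `charge_payment`, then `schedule_delivery`, a failure in `charge_payment` is logged and `schedule_delivery` is skipped rather than run against an unpaid order. A production engine would add retries, timeouts, and persistence, which this sketch leaves out.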

Finally, the concept of dynamic response is critical in an event-driven architecture. Unlike systems that operate on predefined schedules, event-driven systems respond to new data and conditions as they arrive. This allows businesses to remain agile and adaptive, reacting to changes as they occur rather than waiting for the next scheduled cycle.

Benefits of Event-Driven Process Orchestration

The adoption of event-driven process orchestration offers several compelling advantages for organizations. One of the most significant benefits is agility and flexibility. In environments where business conditions constantly change, an event-driven system allows organizations to adapt quickly to new circumstances, because new behavior can be attached to existing events rather than wired into existing call chains.

Another key benefit is scalability. Event-driven architectures enable organizations to scale their systems without major disruptions. Because services are loosely coupled and communicate through events, it is easier to add new services or scale existing ones without impacting other components of the system. This decoupling of services allows businesses to handle increasing traffic or more complex workflows without significant reengineering.
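To make the loose coupling concrete, here is a small in-memory publish/subscribe sketch. `EventBus` is a hypothetical stand-in for a real broker and is not the API of any particular product; it only shows that a publisher does not need to know who is listening.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """In-memory stand-in for a message broker, for illustration only."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler for a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, Any]) -> int:
        """Deliver the message to every subscriber and return how many."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(message)
        return len(handlers)
```

Adding a new service is a matter of calling `subscribe` with another handler for, say, `"order.placed"`; the code that publishes the event stays unchanged.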

Moreover, event-driven architectures can improve resilience. In tightly coupled systems, a failure in one component may bring down the entire system, but in an event-driven architecture, services interact through events rather than direct calls. If one service fails, it can often be isolated and recovered without affecting the rest of the system, and events it missed can be processed once it is back, provided the event platform retains them. This fault tolerance helps business processes continue to function even in the face of failures.
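The following sketch shows one way failure isolation might look in code: each handler is called independently, retried a few times, and recorded in a dead-letter list if it never succeeds. The function `deliver` and the `max_attempts` parameter are assumptions made for this example.

```python
from typing import Any, Callable, Dict, List, Tuple

Handler = Callable[[Dict[str, Any]], None]


def deliver(
    message: Dict[str, Any],
    handlers: Dict[str, Handler],
    max_attempts: int = 3,
) -> List[Tuple[str, str]]:
    """Call every handler independently.

    Returns (handler name, last error) pairs for handlers that failed
    on every attempt, so the message can be inspected or replayed later.
    """
    dead_letters: List[Tuple[str, str]] = []
    for name, handler in handlers.items():
        last_error = ""
        for _ in range(max_attempts):
            try:
                handler(message)
                break                      # success: stop retrying
            except Exception as exc:
                last_error = str(exc)
        else:
            # The loop finished without a successful call.
            dead_letters.append((name, last_error))
    return dead_letters
```

A handler that keeps failing ends up in the returned list, while the remaining handlers still receive the message. Real brokers usually provide their own retry and dead-letter facilities, so this is only a conceptual outline.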

Implementing Event-Driven Process Orchestration

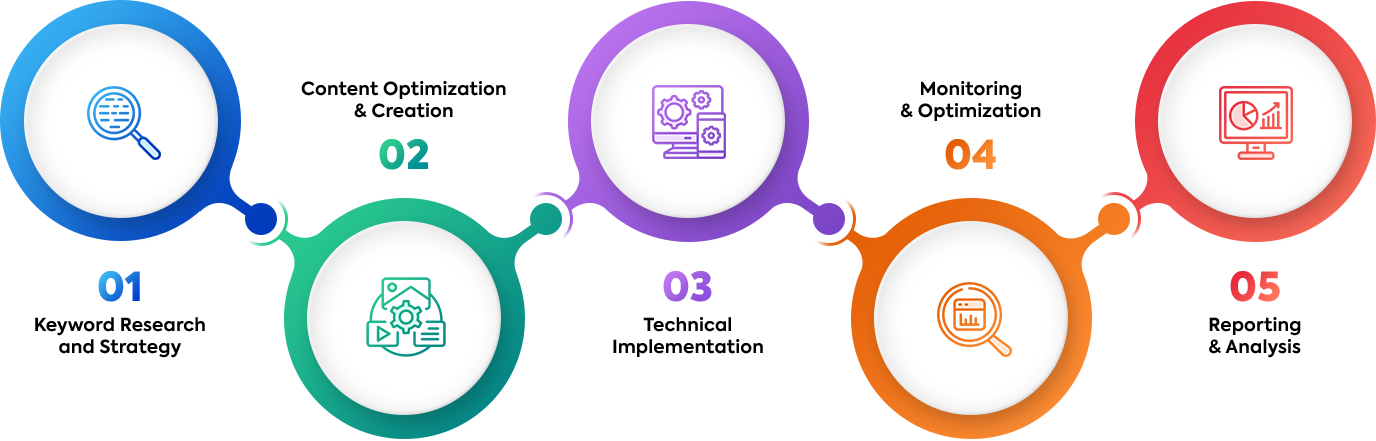

Implementing event-driven process orchestration requires the selection of appropriate tools and strategies that align with an organization’s goals. A primary tool in this implementation is event streaming platforms, such as Apache Kafka, Amazon Kinesis, or Google Cloud Pub/Sub. These platforms handle the event streams, ensuring that each service listens to and reacts to relevant events. By managing event flows, event streaming platforms decouple services, allowing them to remain independent and scalable.

Another important tool is BPMN (Business Process Model and Notation) engines. Tools like Camunda, Red Hat Process Automation Manager (RHPAM), and Activiti provide centralized orchestration of business processes. These engines manage the sequence of tasks within a workflow, coordinating with worker services via event streams to ensure that the process flows smoothly. By using BPMN engines, organizations can model complex workflows in a standardized format, providing a clear and accessible representation of their processes.

Many organizations choose to combine event streaming platforms with BPMN engines in a hybrid model. This approach allows for the centralized orchestration and coordination of tasks while leveraging the flexibility and scalability of event-driven systems. By using both tools in tandem, organizations can achieve a more balanced and robust architecture that ensures both agility and process management.
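A rough sketch of the hybrid idea follows: a central orchestrator tracks where each process instance stands, and reacts to completion events from workers by naming the next command to publish. The class `Orchestrator`, the `STEPS` list, and the `.requested` and `.completed` naming are all hypothetical; in a real setup the sequence would come from a BPMN model and the messages would travel over an event streaming platform.

```python
from typing import Dict, List, Optional

# Ordered steps of a hypothetical order process.
STEPS: List[str] = ["check_inventory", "process_payment", "schedule_delivery"]


class Orchestrator:
    """Tracks each process instance and decides the next command."""

    def __init__(self) -> None:
        self.position: Dict[str, int] = {}   # instance id -> index in STEPS

    def start(self, instance_id: str) -> str:
        """Begin a process and return the first command to publish."""
        self.position[instance_id] = 0
        return f"{STEPS[0]}.requested"

    def on_completed(self, instance_id: str, step: str) -> Optional[str]:
        """React to a '<step>.completed' event sent by a worker."""
        index = self.position[instance_id]
        if STEPS[index] != step:
            return None                       # stale or out-of-order event
        if index + 1 == len(STEPS):
            del self.position[instance_id]    # process finished
            return None
        self.position[instance_id] = index + 1
        return f"{STEPS[index + 1]}.requested"
```

The orchestrator never calls a worker directly. It only turns incoming events into the next command, which keeps the workers decoupled while the process state stays in one place.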

Overcoming Challenges in Event-Driven Process Orchestration

While event-driven process orchestration offers numerous benefits, it also presents challenges that practitioners must address to ensure successful implementation. One of the most common challenges is a loss of cohesion, sometimes described as cohesion chaos. In a microservices-based architecture, services often operate independently, responding to events in isolation. If these services are not properly orchestrated, no single place describes the end-to-end process, and the overall system can become fragmented, leading to inefficiencies and errors. To avoid this, careful planning is required to define clear event flows, ensure reliable communication between services, and maintain coherence throughout the system.

Another challenge is the complexity of implementation and management. Building and managing an event-driven architecture is not a simple task, particularly in large organizations with multiple systems and services. Organizations must invest in training, tools, and monitoring systems to ensure that the architecture functions smoothly. The complexity also extends to testing and debugging, as tracing issues through event streams can be more difficult than in traditional request-response models.
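One common way to make event flows easier to trace is to stamp every event with a correlation ID that follow-up events inherit. The sketch below assumes a simple dictionary-based event format; the field names `correlation_id` and `caused_by` and the helper functions are illustrative, not a standard.

```python
import uuid
from typing import Any, Dict, List, Optional


def new_event(
    type_: str,
    payload: Dict[str, Any],
    cause: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Create an event that inherits the correlation ID of its cause."""
    return {
        "id": str(uuid.uuid4()),
        "type": type_,
        "payload": payload,
        # A root event starts a new correlation ID; others reuse it.
        "correlation_id": cause["correlation_id"] if cause else str(uuid.uuid4()),
        "caused_by": cause["id"] if cause else None,
    }


def trace(events: List[Dict[str, Any]], correlation_id: str) -> List[str]:
    """List the event types of one business transaction, in log order."""
    return [e["type"] for e in events if e["correlation_id"] == correlation_id]
```

With this in place, filtering a shared log by one correlation ID reconstructs the path of a single order through several services, which is the kind of view a debugger would otherwise have to piece together by hand.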

Finally, data consistency can become a significant issue in event-driven architectures. Since services operate independently and communicate asynchronously, ensuring that data remains consistent across the system can be challenging. To address this, businesses typically accept eventual consistency, where services may be temporarily out of sync but converge once all events have been processed, and support it with mechanisms such as event sourcing, which keeps an ordered log of events from which each service can rebuild its state.
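As a small illustration of event sourcing, the sketch below rebuilds stock levels by replaying an event log and skips events it has already applied, so a redelivered event does not corrupt the result. The tuple layout of `StockEvent` and the function `replay` are assumptions made for this example.

```python
from typing import Dict, Iterable, Set, Tuple

# (event_id, sku, quantity_change); the tuple layout is an assumption.
StockEvent = Tuple[str, str, int]


def replay(events: Iterable[StockEvent]) -> Dict[str, int]:
    """Rebuild stock levels from the event log, applying each event once."""
    stock: Dict[str, int] = {}
    seen: Set[str] = set()
    for event_id, sku, change in events:
        if event_id in seen:      # redelivered event: already applied
            continue
        seen.add(event_id)
        stock[sku] = stock.get(sku, 0) + change
    return stock
```

Because the state is derived from the log, a service that was temporarily out of sync can catch up by replaying the events it missed. Two services that have processed the same events arrive at the same stock levels.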

Real-World Applications of Event-Driven Process Orchestration

In the financial industry, for example, real-time event detection and processing are crucial for fraud detection, risk management, and automated trading systems. Event-driven systems allow these organizations to respond immediately to suspicious activities, market shifts, or customer transactions, ensuring that they remain competitive and secure.

In e-commerce, event-driven architectures play a significant role in managing customer orders, inventory, and delivery processes. When a customer places an order, events trigger workflows that check inventory, process payments, and schedule deliveries. These processes can run concurrently and adapt to new information, such as changes in stock levels or customer requests, ensuring that the business can provide an efficient and personalized service.
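The order flow described above might be sketched as follows, using `asyncio.gather` from the Python standard library to run the inventory check and the payment step concurrently. The function names, the fields of the `order` dictionary, and the returned status strings are hypothetical, and the `asyncio.sleep(0)` calls stand in for real service calls.

```python
import asyncio
from typing import Any, Dict


async def check_inventory(order: Dict[str, Any]) -> bool:
    await asyncio.sleep(0)          # placeholder for a real service call
    return order["quantity"] <= order["in_stock"]


async def process_payment(order: Dict[str, Any]) -> bool:
    await asyncio.sleep(0)          # placeholder for a real service call
    return order["amount"] > 0


async def handle_order_placed(order: Dict[str, Any]) -> str:
    """Workflow triggered by an 'order placed' event."""
    # Run both checks concurrently and wait for both results.
    in_stock, paid = await asyncio.gather(
        check_inventory(order), process_payment(order)
    )
    if not in_stock:
        return "backordered"
    if not paid:
        return "payment_failed"
    return "delivery_scheduled"
```

Running `asyncio.run(handle_order_placed({"quantity": 1, "in_stock": 5, "amount": 20}))` walks through the whole flow. Note that running payment in parallel with the stock check means a real system would likely need a compensating step, such as a refund, when the payment succeeds but the item turns out to be unavailable.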

The healthcare industry also benefits from event-driven process orchestration. Patient monitoring systems, for instance, rely on sensors that trigger events when a patient’s vital signs change. These events can then trigger alerts, notify medical staff, or update patient records in real-time, ensuring that healthcare providers are equipped with the most current information for decision-making.

Future of Event-Driven Process Orchestration

As businesses increasingly adopt microservices, cloud computing, and automation, the demand for event-driven process orchestration will continue to grow. The future of this approach lies in further enhancing scalability, flexibility, and reliability. With advancements in event streaming technologies and process orchestration tools, organizations can expect more seamless integrations, better support for complex workflows, and improved fault tolerance.

Additionally, the rise of AI and machine learning may further enhance event-driven systems. By incorporating predictive analytics, these systems could anticipate likely events before they occur, allowing businesses to be more proactive in their response strategies and to optimize operations earlier than purely reactive systems allow.

Conclusion

Event-driven process orchestration is a powerful approach for managing workflows in today’s fast-paced business environment. By adopting this strategy, businesses can achieve greater agility, scalability, and resilience in their operations. However, it’s essential to carefully plan and implement the necessary tools and strategies to overcome the challenges associated with this architecture. With the right combination of event streaming platforms, BPMN engines, and hybrid models, organizations can successfully orchestrate their processes and unlock new efficiencies.
